Several of xByte's customers have asked whether the Dell H710P controller would ultimately limit the performance of a large number of SSDs in a server. While it seems that it would be a bottleneck, I didn't have any test data showing to what extent the H710P would limit SSD array performance. I therefore could not definitively advise our customers on the best number of SSDs to serve their needs. To get data that would let us advise customers better, I set up the following test server.

- R620 with the 2.5×10 backplane.

- H710P Controller

- 10 Edge 240GB SSD drives

- VMware 5.5 as a virtual host

- Windows 2012 as a virtual server

- IOmeter and the configuration test file found on Technodrone

First a few words about FastPath I/O

After setting up my VM and testing with a 2-drive RAID 1 array, results looked good. I was able to achieve over 26,000 IOPS and 216 MB/s using the 2 drives and the Real Life access specification. Things took a turn for the worse when I tested with a 4-drive RAID 10 array: IOPS dropped below 25,000 and bandwidth to 204 MB/s. Adding 2 more drives to the array caused performance to drop even lower.

I had never seen results like this with spinning disks, so I did some research and found that the H710P includes a feature called FastPath I/O, specifically for use with SSDs. FastPath I/O is enabled on a virtual disk when the read cache is disabled and the write cache is set to write-through. Rerunning the Real Life tests with FastPath I/O enabled produced the expected result, where an increased number of drives increased performance.

The Real Life test is 60% random. For 100% sequential tests, FastPath I/O actually decreased performance for smaller arrays. It's important to know what type of I/O workload you have and tailor your setup to match.
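The FastPath rule above is easy to check against a list of virtual disk settings. The following is a minimal sketch only: the policy labels (`no_read_ahead`, `write_through`, and so on) and the sample disk names are hypothetical, not the strings the controller or any Dell tool actually reports.

```python
# Minimal sketch of the FastPath rule described in this post:
# read cache disabled AND write cache set to write-through.
# The policy labels below are hypothetical placeholders.

def fastpath_eligible(read_policy: str, write_policy: str) -> bool:
    """Return True when the cache settings match the FastPath rule."""
    return read_policy == "no_read_ahead" and write_policy == "write_through"


# Hypothetical virtual disks, for illustration only.
virtual_disks = [
    {"name": "vd0", "read_policy": "no_read_ahead", "write_policy": "write_through"},
    {"name": "vd1", "read_policy": "read_ahead", "write_policy": "write_back"},
]

for vd in virtual_disks:
    ok = fastpath_eligible(vd["read_policy"], vd["write_policy"])
    print(f"{vd['name']}: FastPath settings {'match' if ok else 'do not match'}")
```

A check like this only confirms the cache settings; whether FastPath helps still depends on the workload, as the sequential results show.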

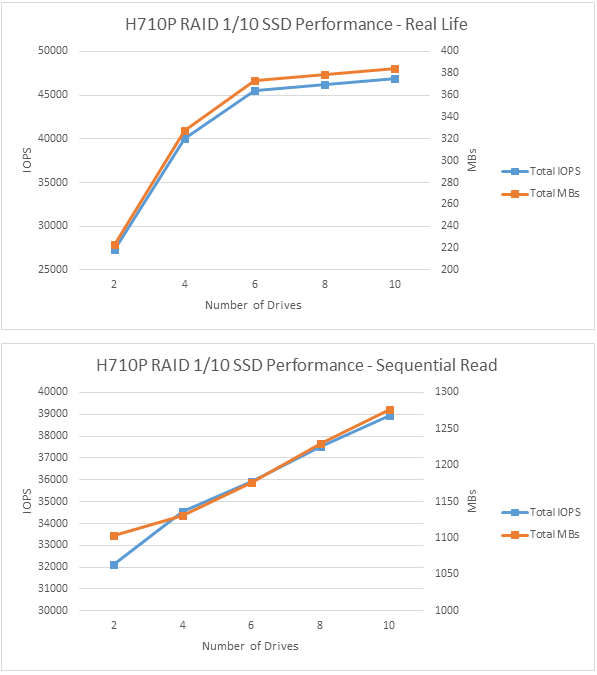

RAID 1/10 Results

Once the details of FastPath were worked out, RAID 1/10 Real Life results were very predictable. From 2 to 6 drives in the array, performance increased significantly. From 6 to 10 drives, performance still increased, but it was beginning to level off. For 2- and 4-disk arrays, performance for 100% sequential I/O was improved by using FastPath. For larger arrays, sequential performance was better with FastPath disabled. Sequential performance scaled linearly, with no leveling off as the number of disks increased.

For mixed workloads, adding more than 6 SSDs to a RAID 10 array results in diminishing returns. With that in mind, it might make sense to use a smaller number of larger SSDs to reach your goal for usable disk space. For heavily sequential workloads, a larger number of smaller SSDs may make more sense.
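To make the drive-count trade-off concrete, here is a rough sketch that works out how many drives a RAID 10 array needs for a given usable-space goal. The 960 GB target and the 480 GB drive size are illustrative assumptions, not part of the test setup; the 6-drive threshold comes from the mixed-workload results above. Capacities are nominal and ignore formatting overhead.

```python
import math

# Above this drive count, the mixed-workload RAID 10 results in this post
# showed diminishing returns on the H710P.
DIMINISHING_RETURNS_ABOVE = 6


def raid10_drives_needed(target_usable_gb: float, drive_gb: float) -> int:
    """Smallest even drive count whose mirrored pairs cover the target."""
    pairs = max(1, math.ceil(target_usable_gb / drive_gb))
    return 2 * pairs


target_gb = 960  # hypothetical usable-space goal
for drive_gb in (240, 480):  # 480 GB is a hypothetical larger drive
    n = raid10_drives_needed(target_gb, drive_gb)
    note = "past the 6-drive knee" if n > DIMINISHING_RETURNS_ABOVE else "within the scaling range"
    print(f"{drive_gb} GB drives: {n} drives needed ({note})")
```

For a mixed workload, the configuration that stays at or below 6 drives is the one the results above favor.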

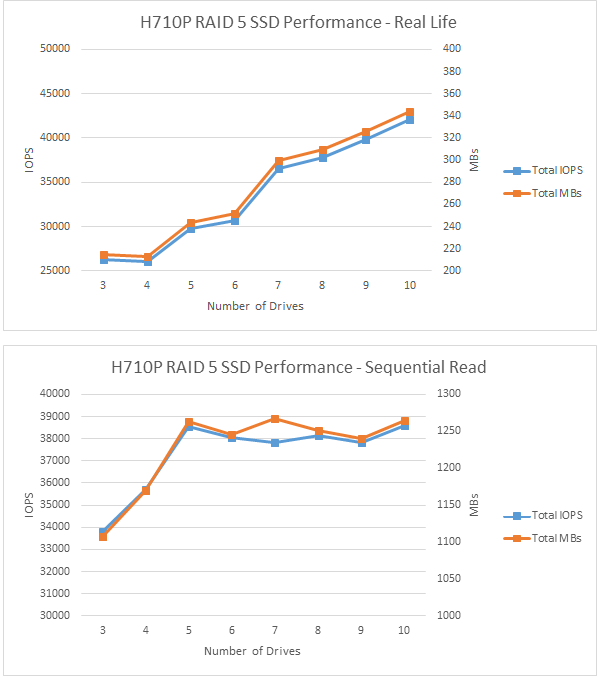

RAID 5

While RAID 5 is no longer recommended with spinning disks, it's still a good choice with SSDs. Performance will not match what RAID 1 or 10 can do, but you will not “waste” as much storage. With RAID 5, I found that for a 3-drive array it was better to leave FastPath disabled. For larger arrays, whether it should be enabled depended on the workload. During the testing I noticed an anomaly when the array contained an even number of drives. Apparently, it takes more time to calculate the parity data for an even number of drives. Because of that, it was harder to see a trend, but unlike RAID 10, the Real Life performance was more linear for larger arrays and did not level off. For sequential read workloads, however, performance increased for 3-, 4- and 5-drive arrays, then began to decrease slightly.

For mixed workloads on RAID 5, it makes sense to use a larger number of smaller SSDs to reach your usable space goal. For sequential workloads, it is better to use a smaller number of large SSDs in RAID 5, as performance peaks at 5 drives.
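The storage "waste" difference between the two RAID levels comes down to simple arithmetic: RAID 5 gives up one drive's worth of capacity to parity, while RAID 10 gives up half the drives to mirroring. The sketch below uses the 240 GB drives from the test server and nominal capacities; the function names are my own and formatting overhead is ignored.

```python
def raid5_usable_gb(drives: int, drive_gb: float) -> float:
    """RAID 5 keeps one drive's worth of capacity for parity."""
    if drives < 3:
        raise ValueError("RAID 5 needs at least 3 drives")
    return (drives - 1) * drive_gb


def raid10_usable_gb(drives: int, drive_gb: float) -> float:
    """RAID 10 mirrors every drive, so half the raw capacity is usable."""
    if drives < 2 or drives % 2:
        raise ValueError("RAID 10 needs an even number of drives (2 or more)")
    return (drives // 2) * drive_gb


# Compare the two levels at the even drive counts both can use.
for n in (4, 6, 8, 10):
    print(f"{n} x 240 GB: RAID 5 = {raid5_usable_gb(n, 240)} GB, "
          f"RAID 10 = {raid10_usable_gb(n, 240)} GB")
```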

Results

I set out to find out whether the H710P controller would hit a performance limit with SSDs. I found that for a real-life mixed workload, the H710P produced diminishing returns for RAID 10 arrays with more than 6 drives. For the unusual case of 100% sequential reads, RAID 5 performance hit a wall at 5 drives.

Conclusion

Using SSDs in RAID 1/10 and RAID 5 can yield very good performance, but you need to make sure you match your configuration to your workload. Without careful consideration of your workload profile, you may not achieve the performance you expect. The Sales Engineering team at xByte Technologies can assist you in determining the best solution for your needs.